6.1 Gated Graph Neural Networks

yuuuun 2020. 11. 20. 15:52

Introduction

There is a trend to use the gating mechanisms of RNNs such as GRU or LSTM in the propagation step, in order to diminish the restrictions of the vanilla GNN model and improve the effectiveness of long-term information propagation across the graph.

6.1 Gated Graph Neural Networks

- GGNN uses Gated Recurrent Units (GRU) in the propagation step.

- It unrolls the recurrence for a fixed number of steps $T$ and uses backpropagation through time to compute gradients.

- Basic recurrence of the propagation model:

$$a_v^t = A_v^T [h_1^{t-1} \ldots h_N^{t-1}]^T + b$$

$$z_v^t = \sigma(W^z a_v^t + U^z h_v^{t-1})$$

$$r_v^t = \sigma(W^r a_v^t + U^r h_v^{t-1})$$

$$\tilde{h}_v^t = \tanh(W a_v^t + U(r_v^t \odot h_v^{t-1}))$$

$$h_v^t = (1 - z_v^t) \odot h_v^{t-1} + z_v^t \odot \tilde{h}_v^t$$

- Node $v$ first aggregates messages from its neighbors. $A_v$ is the sub-matrix of the graph adjacency matrix $A$ and denotes the connections of node $v$ with its neighbors.

- The GRU-like update functions use information from each node's neighbors and from the previous timestep to update each node's hidden state.

- Vector $a$: gathers the neighborhood information of node $v$; $z$ and $r$ are the update and reset gates.

- $\odot$: the Hadamard (element-wise) product operation

- The GGNN model is designed for problems defined on graphs that require outputting sequences, whereas existing models focus on producing single outputs such as node-level or graph-level classifications.
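The recurrence above can be sketched in a few lines of NumPy. This is only a minimal illustration under simplifying assumptions that are not in the notes: a single dense adjacency matrix `A` whose row $v$ stands in for $A_v^T$ (no edge types or separate in/out edges), hidden size `D` for every node, and small random weights. The helper names `ggnn_step`, `propagate`, and `init_params` are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_params(D, rng):
    """Hypothetical helper: random weights W^z, U^z, W^r, U^r, W, U and a zero bias b."""
    mats = [rng.normal(scale=0.1, size=(D, D)) for _ in range(6)]
    return (*mats, np.zeros(D))

def ggnn_step(A, H, params):
    """One propagation step for all nodes at once.

    A: (N, N) adjacency matrix; row v plays the role of A_v^T (assumption).
    H: (N, D) hidden states h_v^{t-1}, one row per node.
    """
    Wz, Uz, Wr, Ur, W, U, b = params
    a = A @ H + b                               # a_v^t: aggregated neighbor states
    z = sigmoid(a @ Wz.T + H @ Uz.T)            # update gate z_v^t
    r = sigmoid(a @ Wr.T + H @ Ur.T)            # reset gate r_v^t
    h_tilde = np.tanh(a @ W.T + (r * H) @ U.T)  # candidate state
    return (1.0 - z) * H + z * h_tilde          # gated interpolation h_v^t

def propagate(A, H0, params, T):
    """Unroll the recurrence for a fixed number of steps T."""
    H = H0
    for _ in range(T):
        H = ggnn_step(A, H, params)
    return H

# Usage: a 3-node path graph with 4-dimensional hidden states.
rng = np.random.default_rng(0)
A = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
H0 = np.clip(rng.normal(size=(3, 4)), -1.0, 1.0)
H_T = propagate(A, H0, init_params(4, rng), T=5)
```

Because $h_v^t$ is a gate-weighted average of $h_v^{t-1}$ and a `tanh` output, the hidden states stay within $[-1, 1]$ whenever the initial states do.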

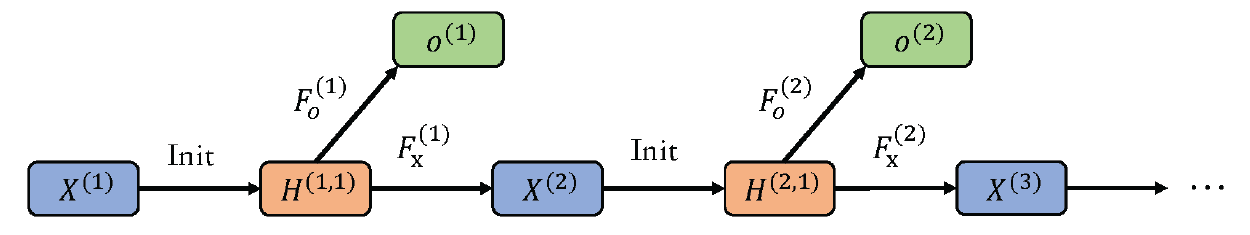

- GGS-NNs (Gated Graph Sequence Neural Networks) use several GGNNs to produce an output sequence $o^{(1)} \ldots o^{(K)}$.

- For the $k$-th output step, the matrix of node annotations is denoted $X^{(k)}$.

- Two GGNNs are used in this architecture

- $F_o^{(k)}$ for predicting $o^{(k)}$ from $X^{(k)}$

- $F_x^{(k)}$ for predicting $X^{(k+1)}$ from $X^{(k)}$

- $H^{(k,t)}$: denotes the $t$-th propagation step of the $k$-th output step

- The value of $H^{(k,1)}$ at each output step $k$ is initialized by $X^{(k)}$.
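The output loop described in this list might look roughly like the following NumPy sketch. It rests on several assumptions that go beyond the notes: annotations and hidden states share one dimension `D`, the two GGNNs $F_o$ and $F_x$ reuse their weights across all output steps, each output $o^{(k)}$ is a node index chosen by a linear readout `w_out`, and the next annotations are obtained with an element-wise sigmoid. The names `make_ggnn`, `run_ggnn`, and `ggs_nn` are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def make_ggnn(D, rng):
    """Hypothetical helper: random GRU-style weights for one GGNN."""
    return [rng.normal(scale=0.1, size=(D, D)) for _ in range(6)]

def run_ggnn(A, X, weights, T):
    """Initialize the hidden states with X (i.e. H^{(k,1)} = X^{(k)}) and apply T updates."""
    Wz, Uz, Wr, Ur, W, U = weights
    H = X
    for _ in range(T):
        a = A @ H                                   # aggregate neighbor states
        z = sigmoid(a @ Wz.T + H @ Uz.T)            # update gate
        r = sigmoid(a @ Wr.T + H @ Ur.T)            # reset gate
        h_tilde = np.tanh(a @ W.T + (r * H) @ U.T)  # candidate state
        H = (1.0 - z) * H + z * h_tilde
    return H

def ggs_nn(A, X1, F_o, F_x, w_out, K, T):
    """Produce K outputs from the initial annotations X1 using two GGNNs."""
    X, outputs = X1, []
    for _ in range(K):
        H_o = run_ggnn(A, X, F_o, T)                 # F_o: X^{(k)} -> o^{(k)}
        outputs.append(int(np.argmax(H_o @ w_out)))  # node-selection readout (assumption)
        H_x = run_ggnn(A, X, F_x, T)                 # F_x: X^{(k)} -> X^{(k+1)}
        X = sigmoid(H_x)                             # next annotations (assumption)
    return outputs

# Usage: 3-node path graph, 4-dimensional annotations, K = 3 outputs.
rng = np.random.default_rng(0)
A = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
X1 = rng.uniform(size=(3, 4))
outs = ggs_nn(A, X1, make_ggnn(4, rng), make_ggnn(4, rng), rng.normal(size=4), K=3, T=4)
```

Each output step restarts propagation from the current annotations, so the only state carried between steps is $X^{(k)}$ itself.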
