yuns

[KDD20] AM-GCN: Adaptive Multi-channel Graph Convolutional Networks 본문

[KDD20] AM-GCN: Adaptive Multi-channel Graph Convolutional Networks

yuuuun 2020. 12. 10. 16:06Author: Xiao Wang, Meiqi Zhu, Deyu Bo, Peng Cui, Chuan Shi, Jian Pei

Introduction

Graph Convolutional Networks(GCNs): a class of neural networks designed to learn graph data.

- applications: social networks, biology networks, citation networks.

- Message-passing manner: feature aggregation

- node aggregates feature information from its topological neighbors in each convolutional layer. (이웃 정보로부터 feature information aggregate)

- Feature information propagates over network topology to node embedding, and then node embedding learned as such is used in classification tasks. (feature information은 network topology를 통하여 node embedding으로 전파, 이 후 학습된 node embedding이 classification task에 사용됨)

- node label에 의해 supervised partially.

- Fusion of GCN

- topological structure와 node feature to learn node embedding

- fusion process is supervised by end-to-end learning framework.

Weakness of the state-of-the-art GCNs in fusing node features and topological structures.

- node feature에 Laplacian smoothing을 사용하기 때문에 전체적인 network을 converge하게 만듦

- topological structures play the role of low-pass filtering(데이터 손실 발생) on node features

Motivation

- What information do GCNs really learn and fuse from topological structures and node features?

Fusion strategy on topological structures and node features to learn node embedding, and the fusion process is supervised by an end-to-end learning framework.

Fusion Capability of GCNs: An experimental investigation

GCN이 그래프의 node features과 topological structures에서 adaptively 학습가능한지 여부 확인 및 classification에서 적절하게 fuse.

- adaptive learning: 알고리즘을 사용하여 학습자와의 상호작용을 조정하고 고유한 요국사항을 해결하기 위하여 맞춤형 리소스 및 학습 활동을 제공하는 교육방법

- adaptive algorithm is an algorithm that changes its behavior at the time it is learn.

high correlation between node label with network topology and node features, respectively.

(node label과 network topology 및 node features 간 높은 correlation을 명확하게 설정

[Case 1] Random Topology and Correlated Node Features

[Case 2] Correlated Topology and Random Node Features

Summary

- The current fusion mechanism of GCN is distant from optimal

- Even the correlation between node label with network topology or node featuers is very high, the current GCN cannot make full use of the supervision by node label to adaptively extract the most correlated information

- Rethink the current mechanism of GCN because it is hard to know whether the topology or the node features are more correlated with the final task

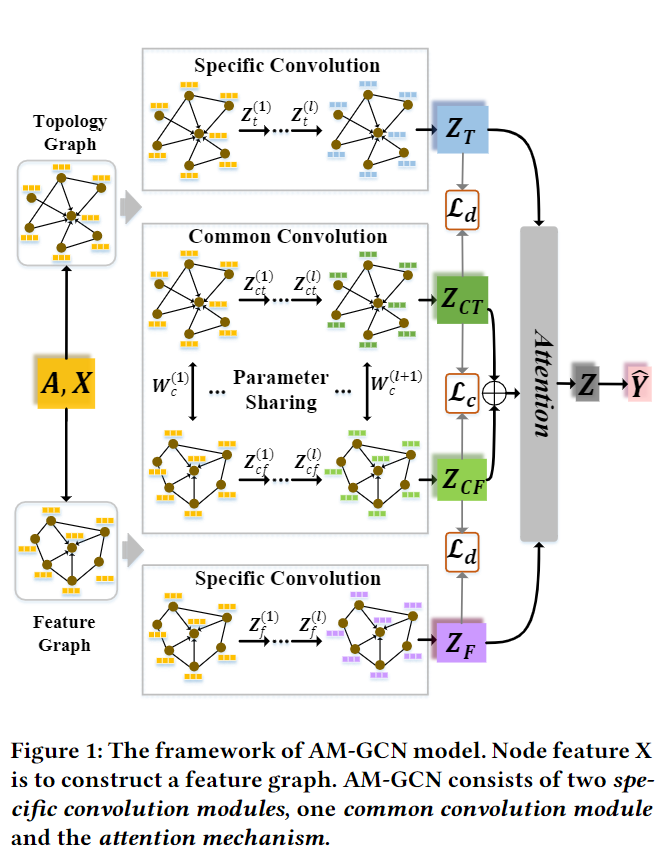

AM-GCN: The Proposed Model

Problem Settings

- Focus on semi-supervised node classification

- Graph $G=(A,X)$

- $A\in \mathbb{R}^{n\times n}$: the symmetric adjacency matrix with $n$ nodes

- $X\in \mathbb{R}^{n\times d}$: the node feature matrix, $d$: dimension of node feature

- Key Idea

- AM-GCN permits node features to propagate in topology space and feature space.

- the most correlated information with node label should be extracted from both of two spaces

- Construction of a feature graph based on node features X.

- X is able to propagate over both of feature graph and topology graph to learn two specific embedding $Z_F$ and $Z_T$, respectively.

- two spaces have common characteristics, a common convolution module with parameter sharing strategy to learn the common embedding $Z_{CF}$ and $Z_{CT}$.

- a consistency constrraint $\mathcal{L}_c$ is employed to enhance the "common" property of $Z_{CF}$ and $Z_{CT}$.

- a disparaity constraint $\mathcal{L}_d$ is to ensure the independence between $Z_F$ and $Z_{CF}$ as well as $Z_C$ and $Z_{CT}$.a

- Considering that node label may be correlated with topology or feature or both, AM-GCN utilizes an attention mechanism to adaptively fuse these embeddings with the learned weights, so as to extract the most correlated information Z for the final classification task.

- Graph $G=(A,X)$

3.1 Specific Convolution Module

1) Feature Space

- Feature정보를 이용하여 edge는 Similarity로 계산하여 새로운 그래프 형성

- First, construct a kNN graph $G_f=(A_f, X)$ based on node feature matrix X

- $A_f$ is the adjacency matrix of kNN graph.

- Calculate the similarty matrix $S\in \mathbb{R}^{n\times n}$ among $n$ nodes and $S$ can be calculated in two ways.

- Cosine Similarity $$S_{ij} = \frac{x_i \dot x_j}{|x_i||x_j|}$$

- Heat Kernel

- $t$: the time parater in heat conduction equation. Here, it is set as 2 $$S_{ij} = e^{-\frac{||x_i - x_j||^2}{t}}$$

- Here, choose cosine similarity to obtain the similarty matrix S

- Choose top-k similar node paris for eafch node to set edges and get adjacency matrix $A_f$.

- input graph $(A_f, X)$, the $l$-th layer output $Z_f^{(l)} = ReLU(\tilde{D}_f^{-\frac{1}{2}}\tilde{A}_f \tilde{D}_f{-\frac{1}{2}} Z_f^{(l-1)}W_f^{(l)})$

- $W_f^{(l)}$: the weight matrix of the $l$-th layer in GCN

- initial $Z_f^{(0)}=X$

- $\tilde{A}_f = A_f + I_f$ and $\tilde{D}_f$: the diagonal degree matrix of $\tilde{A}_f$

- $Z_F$: the last layer output embedding as $Z_F$

2) Topology Space

- original input graph $G_t = (A_t, X_t)$

- $A_t = A$ and $X_t = X$

- the learned output embedding $Z_T$ based on topology graph can be calculated in the same way as in feature space.

3.2 Common Convolution Module

- Need to extract the node specific embedding in two spaces and the common information shared by the two sapces.

- Common-GCN: with parameter sharing strategy to get the embedding shared in two spaces.

- utilize Common-GCN to extract the node embedding $Z_{ct}^{k} from topology graph $(A_t, X)$ $$Z_{ct}^{(l)} = ReLU(\tilde{D}_t^{-frac{1}{2}} \tilde{A}_t \tilde{D}_t^{-frac{1}{2}} Z_{ct}^{(l-1)}W_c^{(l)}$$

- $W_c{(l)}$: the $l$-th layer weight matrix of Common-GCN

- $Z_{ct}^{(l-1)}$: the node embedding in the $(l-1)$-th layer

- $Z_{ct}^{(0)}=X$

- utilize Common-GCN to learn the node embedding from feature graph $(A_f, X)$ in order to extract the shared information, share same weight matrix $W_c^{(l)}$ for every layer $$Z_{cf}^{(l)} = ReLU(\tilde{D}_t^{-frac{1}{2}} \tilde{A}_t \tilde{D}_t^{-frac{1}{2}} Z_{cf}^{(l-1)}W_c^{(l)}$$

- $Z_{cf}^{(l)}$: the $(l)$-th layer out embedding

- $Z_{cf}^{(0)}=X$

- The shared weighte materix can filter out the shared characteristics from two spaces.

- The common embedding $$Z_C = \frac{Z_{CT}+Z_{CF}}{2}

- utilize Common-GCN to extract the node embedding $Z_{ct}^{k} from topology graph $(A_t, X)$ $$Z_{ct}^{(l)} = ReLU(\tilde{D}_t^{-frac{1}{2}} \tilde{A}_t \tilde{D}_t^{-frac{1}{2}} Z_{ct}^{(l-1)}W_c^{(l)}$$

3.3 Attention Mechanism

3.4 Objective Function

'paper study > graph' 카테고리의 다른 글

| Q-Learning (0) | 2020.12.13 |

|---|---|

| DQN(Deep Q-Networks) (0) | 2020.12.13 |

| Markov Decision Process (0) | 2020.12.13 |